In the run-up to the conference, we speak with Nadia Piet. With her organization AIxDESIGN, she explores how we should relate to AI. Where popular AI discourse tends to be one-sided and extreme, Nadia Piet takes a different route. Together with her community, she explores how we can use AI more ethically: without Big Tech influences, with a smaller ecological footprint, and with decentralized organization. ‘I’m critical of AI, but also very enthusiastic.’

Hey Nadia! How are you?

Good! I’m looking forward to the conference. Especially the workshops seem fun!

Let’s talk about AI. Do you have a positive or negative attitude towards AI? How do you approach it?

That’s a good question, because I refuse to use this binary opposition of good and bad when it comes to AI. A problem with AI discourse is the extreme binaries we think in: it’s either hype or doom. That leads to a more polarized landscape in which people don’t really talk, listen or ask questions. To move forward, we don’t have to pick a side. I’m critical of AI, but I’m also very excited about it. We can’t just say ‘AI is great’ and not acknowledge the problems, but we also can’t just say ‘this is the end of the world’ and not acknowledge the other ways of using it and the exciting potential it has. So I don’t pick sides, and I adopt a non-dualistic approach.

Could you explain your work and the role of AI in it?

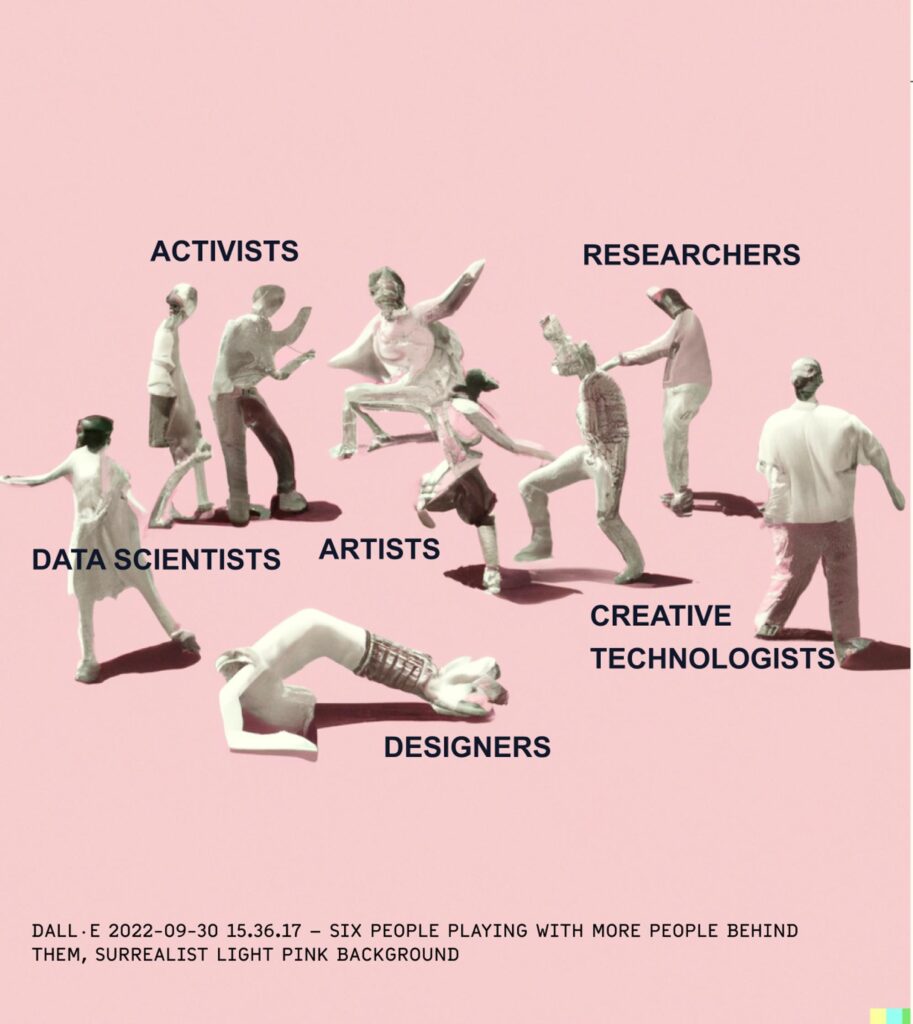

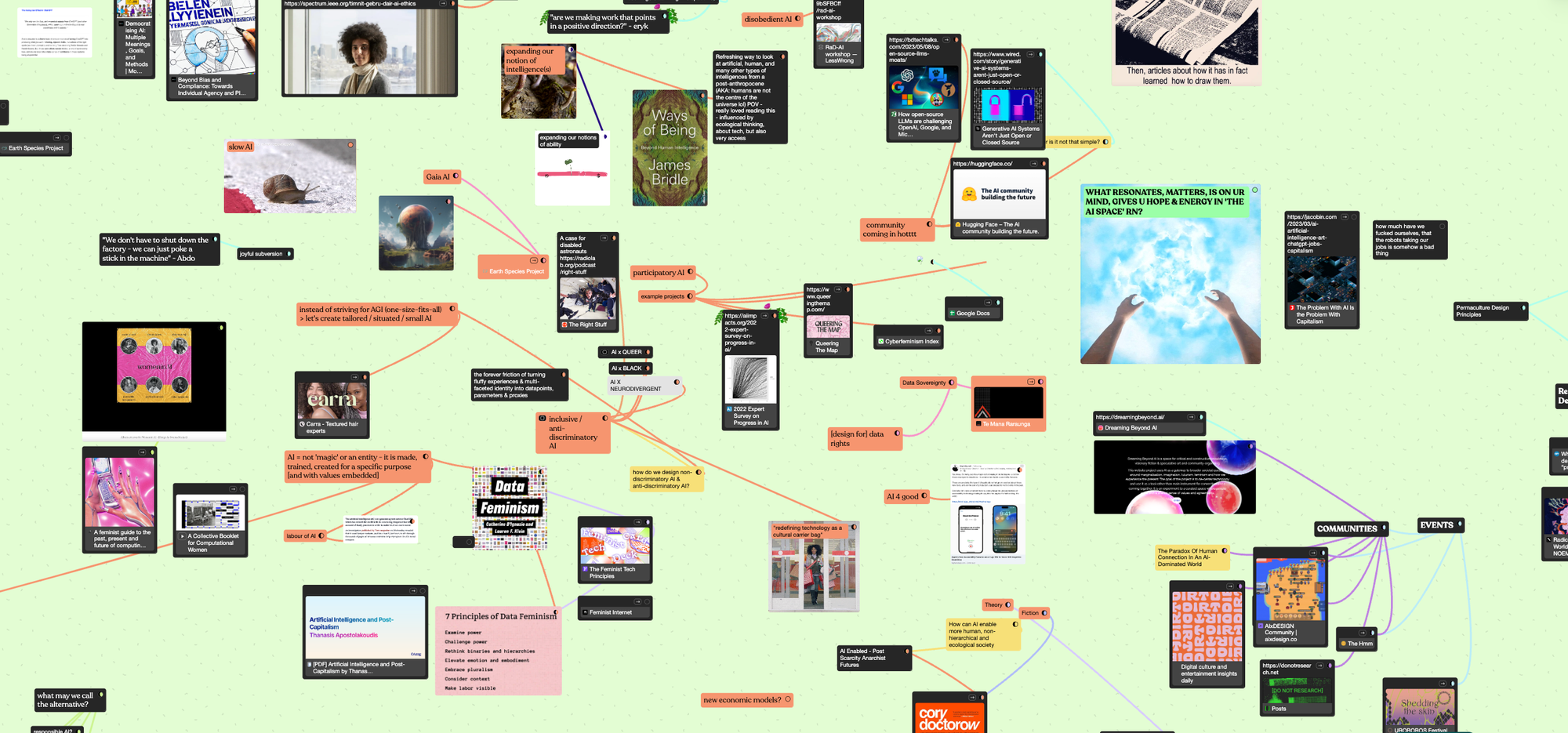

Most of my work now is creative research and design research (so not academic). Through this research, I’m finding ways of relating to emerging technologies and culture. It’s about figuring things out together, about sense-making, about how to relate to these changes, what agency we have, and how we can build the present and future that we want. I mostly do this through AIxDESIGN. This is a global community and research and design studio for critical AI. We host events, run community-led research projects, organize programs, and make public resources to democratize AI literacy and critical discourse, question algorithmic affordances, and prototype and practice new ways of being in relation to AI and technology.

What is critical AI?

We became familiar with this term last year and latched on to it because that’s basically what we do. Critical AI examines AI not just as a technology but also in terms of the politics, power structures, cultural narratives, and public perception that surround it. When we say AI, we rarely talk about these pivotal systems shaping it. Critical AI builds on critical theory and critical media studies and brings that frame to AI.

Let’s dive into these systems that underlie AI. Which problems do we face here?

So many! The way it is currently built and implemented amplifies the same problems that we have in society: inequality, free labour, and ecological destruction. And it’s not affecting people equally.

Big Tech also largely shapes how we think about and perceive AI, as it owns most of the popular AI tools and dominates narratives of AI harms and benefits, both now and in the future, all heavily influenced by profit-making interests and worrisome ideologies. But there are other ways to do things. There are small AI projects that are very cool and exciting and are doing it totally differently with a different infrastructure.

What are these new infrastructures?

Well, they are not really new. They are often old-school, not shiny and innovative. It’s a more decentralized approach to governance. There are people and organizations that create their own data sets, train and fine-tune their own models, and govern them collectively, rather than leaving this to a centralized company that extracts the data for its own purposes.

“Very few people know exactly what it is, how it works, where it comes from and what it is made of”

You also tackle the problem of AI literacy with AIxDESIGN. What’s the problem here and how do we solve it?

It’s about the digital divide in data and AI. There is a lot of talk about AI, but many people are sidetracked by dramatic headlines in the media, while very few people know exactly what it is, how it works, where it comes from and what it is made of. We want people to have more agency and to be able to have conversations about it, because AI is not going anywhere; it is here to stay. Therefore, we have to build some of that literacy. We do that through talks and workshops and by embedding it in education. Some basic data science for everyone is needed because, at this point, it is part of everything. Everything is digital.

Let’s say someone creative wants to ethically use AI in their work. What advice do you have for this person?

If this person uses generative AI – because there are different sorts of AI – it’s important to keep in mind that this type of AI couldn’t exist without a literal extraction of the web. In whichever way you engage with it, it will always be extractive. But so is most technology: your phone, your laptop. That’s something to be mindful of in the first place.

There are ways to do things differently. One of our rules is that when you prompt, you should not use the names of people who are still paying rent. This means don’t take from living artists’ work and livelihood. All modern-day artists are in the data set, but you can decide not to ‘fish from that pond’.

Another tip is running models locally rather than through the cloud and online tools. You can do this with Stable Diffusion, an open-source model, which is easy to install and run locally on your computer. It doesn’t send your data back to the (big tech) organization or cloud, is way less ecologically damaging, and you have more (artistic) control over it.

And if you can, train your own models and use your own data set. There are plenty of initiatives experimenting with this and building tools that make it easier for others to do this themselves, supporting this concept of specific and situated intelligences (as opposed to tech billionaires’ dream of Artificial General Intelligence as ‘one huge model for all’), which we refer to as Small AI.

At AIxDESIGN, we have come to refer to these rules and hacks as Prompt Etiquette: a sort of social and moral compass for using generative AI in less icky ways.

What message would you like to get across during the conference?

It’s so important to have independent spaces where we can talk, dream, and sense-make about technology together. These ‘third spaces’ are more accessible, communal and creative, existing beyond the ideologies of Big Tech and the conventions of the academic world. Just like PublicSpaces is doing.

At AIxDESIGN, we have been doing this, and have been committed to it, since 2019. We are now slowly maturing as an organization and are still figuring out how to make our work as a grassroots DIY organization (financially) sustainable so we can keep doing what we love. My hope for the conference is to connect with other co-conspirators working towards these same ideas, perhaps even meet potential partners and collaborators for AIxDESIGN, and of course, collect some funky stickers.

Curious to learn more? Meet Nadia Piet at the conference during the session “Impact and Use of Generative AI” on Thursday, June 6. In this panel, we will discuss how cultural and social organizations can use AI, particularly in public-facing applications. How do you do this responsibly, i.e. with a critical attitude, and constructively? (Language: Dutch)